How Diaflow Achieved Active-Active Architecture and SOC 2 Compliance in 28 Days

Diaflow, an AI-native automation platform, faced a critical scaling bottleneck: the need to simultaneously deploy multi-region infrastructure and achieve strict regulatory compliance (SOC 2, HIPAA, GDPR) to close enterprise deals. By leveraging CloudThinker's unified AI operations, Diaflow compressed a standard 6-month roadmap into a 4-week sprint, achieving 99.9% uptime and reducing operational toil by 80%.

When we started, I honestly didn't believe this timeline was achievable. We were incredibly impressed by the flexibility and professional ability of the CloudThinker team to dive deep into our specific architecture. They didn't just resolve our technical debt; they helped us achieve the highest security standards and compliance in a very short time. It was a seamless experience from start to finish.

The Friction of Hypergrowth: When Success Becomes a Bottleneck

For platforms like Diaflow, processing over 1 million monthly workflows for thousands of global customers introduces a unique set of infrastructure paradoxes. The systems required to serve current traffic are often insufficient for the security and resilience demanded by the next tier of enterprise contracts.

Diaflow reached a critical inflection point where operational complexity threatened to decelerate product velocity. The technical requirements were threefold and seemingly contradictory:

- Global Latency Reduction: Immediate need for active-active deployment across multiple AWS regions.

- Regulatory Hardening: Prerequisites for SOC 2, HIPAA, and GDPR certifications to unblock enterprise sales pipelines.

- Velocity Preservation: Keeping the added operational overhead from slowing down feature shipping cycles.

Traditional scaling methods, such as hiring specialized Cloud Architects and Security Engineers, presented a 6-to-12-month lead time. In the AI automation market, where speed is the primary differentiator, this timeline was not viable.

The Paradigm Shift: Unified AI Operations

Diaflow partnered with CloudThinker to move beyond traditional managed services. Instead of augmenting the team with human contractors, CloudThinker deployed a unified solution driven by specialized AI agents.

Figure 1: CloudThinker managed the entire process, providing end-to-end service for a secure and compliant migration to the AWS Cloud.

The solution architecture addressed the challenge through four integrated pillars:

- Cloud Migration & Modernization: Unlike standard "lift and shift" approaches, CloudThinker agents architected a seamless migration to a cloud-native, active-active topology. This ensured zero downtime during the transition and optimized configurations from Day 0.

- Cloud Optimization: To counter the cost spiral of multi-region deployment, intelligent agents handled right-sizing and resource scheduling. This autonomous management drove a 30-40% cost reduction compared to manual provisioning.

- Security Assessment: Security was not treated as an audit step but as a continuous process. Agents performed continuous vulnerability scanning and misconfiguration detection, executing automated remediation across the entire infrastructure without human intervention.

- Compliance: The platform automated the monitoring and reporting required for SOC 2, GDPR, and HIPAA. It generated real-time audit trails and enforced policies programmatically, turning compliance into a background process rather than a manual burden.

The 4-Week Execution Sprint

The transformation was executed in three phases, leveraging the four pillars in parallel to minimize rework.

Phase 1: Architecture & Compliance Mapping (Weeks 1-2)

The initiative began with a "Secure-by-Design" methodology.

- Topology Design (Migration): Agents architected a global VPC peering strategy to ensure low-latency inter-region communication.

- Gap Analysis (Compliance): Compliance agents simultaneously scanned the proposed architecture. Vulnerabilities in encryption-at-rest and IAM least-privilege access were identified and remediated in the Terraform definitions before a single resource was provisioned.
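To make the gap analysis concrete, here is a minimal sketch of a pre-provisioning check. It assumes the plan has been exported to JSON (for example with `terraform show -json`) and inspects only a small, illustrative subset of resource types. The function name `find_gaps` and the rule table are hypothetical and are not CloudThinker's implementation.

```python
import json

# Attribute that signals encryption at rest, per resource type.
# Illustrative subset only; a real rule set would cover far more types.
ENCRYPTION_FLAGS = {
    "aws_ebs_volume": "encrypted",
    "aws_db_instance": "storage_encrypted",
}


def _as_list(value):
    """IAM policy fields may be a single string or a list of strings."""
    return value if isinstance(value, list) else [value]


def find_gaps(plan: dict) -> list[str]:
    """Return human-readable findings for a Terraform plan in JSON form."""
    findings = []
    for rc in plan.get("resource_changes", []):
        # "after" is null for resources that are being destroyed.
        after = (rc.get("change") or {}).get("after") or {}
        rtype = rc.get("type")

        flag = ENCRYPTION_FLAGS.get(rtype)
        if flag and not after.get(flag):
            findings.append(f"{rc['address']}: encryption at rest is not enabled")

        if rtype == "aws_iam_policy" and after.get("policy"):
            document = json.loads(after["policy"])
            for stmt in _as_list(document.get("Statement", [])):
                if stmt.get("Effect") != "Allow":
                    continue
                actions = _as_list(stmt.get("Action", []))
                resources = _as_list(stmt.get("Resource", []))
                if "*" in actions and "*" in resources:
                    findings.append(
                        f"{rc['address']}: wildcard Allow violates least privilege"
                    )
    return findings
```

Running a check like this against the plan, rather than against live infrastructure, is what allows findings to be fixed in the Terraform definitions before anything is provisioned.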

Phase 2: The Stateful Challenge (Week 3)

Moving stateless microservices is trivial; distributing state is the primary engineering challenge.

- Database Orchestration: Agents configured multi-region clusters with automated failover logic, tuning replication to keep lag low and data consistent across regions.

- Kubernetes (EKS) Orchestration: Agents managed the EKS control plane, establishing node scaling policies and rolling update strategies to ensure the zero-downtime mandate was met.
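One way to picture lag-aware failover is as a selection rule over replicas. The sketch below is an assumption about how such logic could be structured, not a description of the configuration used for Diaflow. The `Replica` type, the `pick_failover_target` function, and the 5-second lag threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Replica:
    region: str
    healthy: bool
    lag_seconds: float  # how far this replica trails the primary


def pick_failover_target(
    replicas: Iterable[Replica], max_lag_seconds: float = 5.0
) -> Optional[Replica]:
    """Pick the healthy replica with the least lag, or None if none qualifies.

    Replicas whose lag exceeds the threshold are skipped, because promoting
    them would lose more recent writes than the caller is willing to accept.
    """
    candidates = [
        r for r in replicas if r.healthy and r.lag_seconds <= max_lag_seconds
    ]
    return min(candidates, key=lambda r: r.lag_seconds, default=None)
```

Returning `None` rather than the "least bad" replica keeps the decision explicit: when no replica is fresh enough, a human or a higher-level policy has to choose between availability and data loss.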

Phase 3: Intelligent Observability & Handover (Week 4)

The final phase focused on "Day-2 Operations"—ensuring the system remains stable and cost-efficient.

- Self-Healing Logic (Security): An observability mesh was deployed to categorize incidents by severity. Routine issues (e.g., hung pods, resource exhaustion) trigger automated remediation workflows.

- Cost Governance (Optimization): Intelligent Savings Plan recommendations and resource scheduling were activated, ensuring the new global footprint remained within budget.
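The severity-based routing described for self-healing can be sketched as a small lookup. This is a simplified illustration: the incident kinds come from the examples above, while the action names, the `Severity` enum, and the `triage` function are hypothetical placeholders.

```python
from enum import Enum


class Severity(Enum):
    ROUTINE = "routine"    # safe to remediate automatically
    CRITICAL = "critical"  # needs a human decision


# Known routine incident kinds and the automated workflow each one triggers.
# The action names are placeholders, not real workflow identifiers.
REMEDIATIONS = {
    "hung_pod": "restart_pod",
    "resource_exhaustion": "scale_out",
}


def triage(incident_kind: str) -> tuple[Severity, str]:
    """Map an incident kind to a severity and the next action to take."""
    action = REMEDIATIONS.get(incident_kind)
    if action is not None:
        return Severity.ROUTINE, action
    # Anything not explicitly listed is escalated rather than auto-fixed.
    return Severity.CRITICAL, "page_on_call"
```

Defaulting unknown incidents to escalation is the conservative choice: automation only acts on cases that were deliberately listed as safe.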

Operational Breakthrough: Zero-Touch Root Cause Analysis

Perhaps the most significant day-to-day impact was the drastic reduction in Mean Time to Resolution (MTTR)—shifting from days to minutes.

In a distributed architecture, debugging is notoriously difficult. A latency spike could stem from the application layer (Kubernetes), the data layer (Postgres), or the network layer (AWS).

How the Agentic Platform Works: CloudThinker agents ingest and correlate telemetry across the entire stack simultaneously:

- AWS Infrastructure

- Kubernetes

- Database

- Application Logs

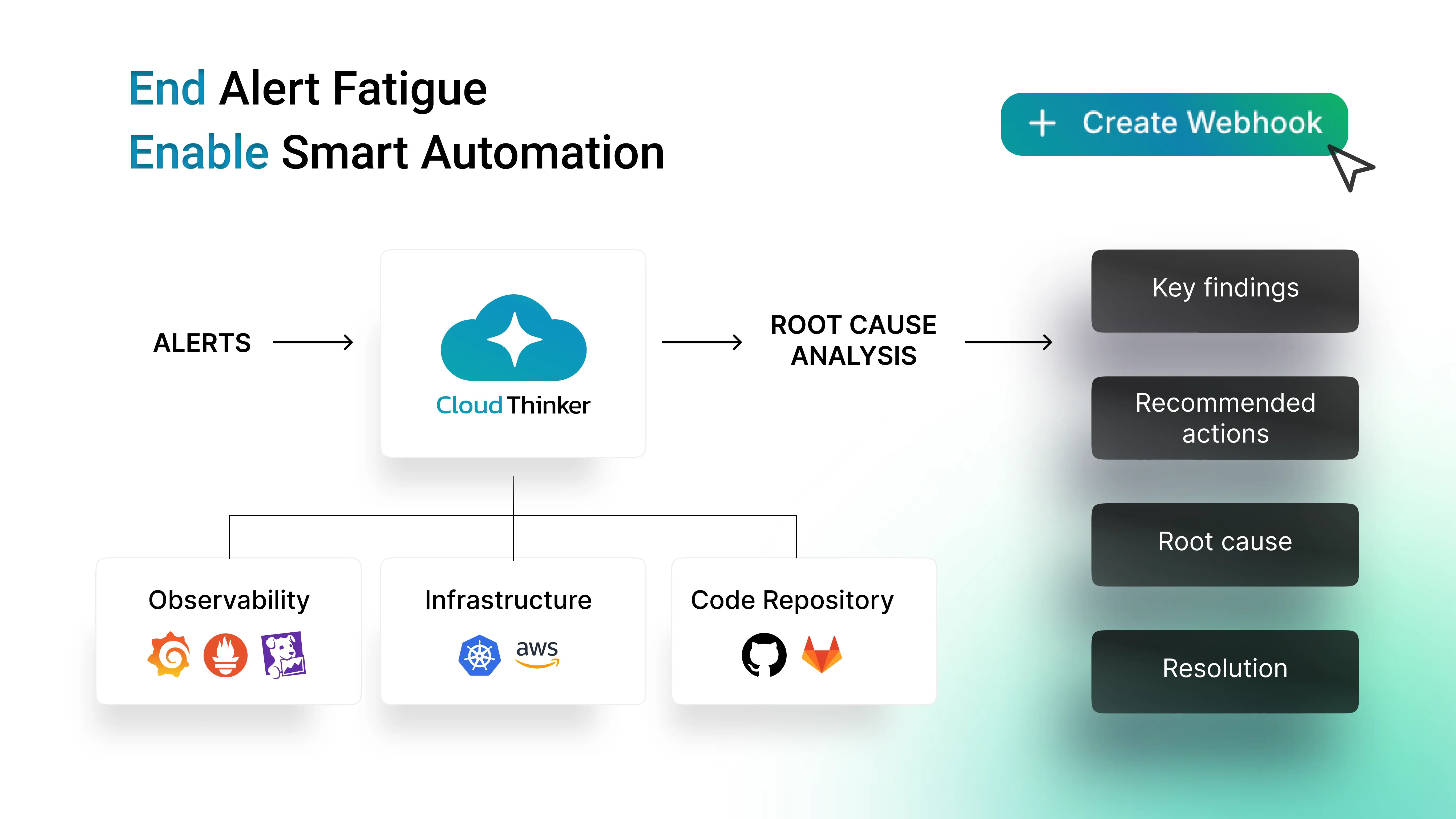

Figure 2: CloudThinker identified the root cause through stack-wide correlation and webhook alerts
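Stack-wide correlation can be approximated, in its simplest form, by collecting events from every layer that fall close in time to an alert. The sketch below assumes telemetry has already been normalized into a common shape. The `Event` type, the `correlate` function, and the 60-second window are hypothetical, and real correlation would also use shared identifiers such as request or resource IDs.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Event:
    timestamp: float  # seconds since the epoch
    layer: str        # e.g. "aws", "kubernetes", "database", "application"
    message: str


def correlate(
    events: Iterable[Event], alert_time: float, window_seconds: float = 60.0
) -> dict[str, list[str]]:
    """Group messages by layer for events within the window around an alert.

    Messages inside each layer are returned in chronological order.
    """
    by_layer: dict[str, list[str]] = {}
    for event in sorted(events, key=lambda e: e.timestamp):
        if abs(event.timestamp - alert_time) <= window_seconds:
            by_layer.setdefault(event.layer, []).append(event.message)
    return by_layer
```

Even this crude grouping shows why a single view helps: an engineer sees which layers were active around the alert without opening four separate consoles.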

Real-World Scenario: The CloudFront/S3 Content-Type Disconnect

Users reported broken image assets: PNG files failed to render because they were served with Content-Type: text/xml instead of image/png.

The Old Way (6-24 Hours):

Engineers manually inspect S3 metadata, review CloudFront configs, check cache policies, test URLs, and trace upload pipelines—while users experience broken functionality.

The CloudThinker Way (15 Minutes):

- Detection: Agent instantly identified the CloudFront distribution and correlated Content-Type errors with stale cached responses.

- Analysis: Confirmed that S3 held the correct metadata (image/png), but CloudFront was serving cached responses with the wrong headers. Root cause: files were uploaded without a Content-Type and later corrected in S3, but the CloudFront cache still held the stale responses.

- Solutioning: Created cache invalidation, verified completion, and generated prevention recommendations with code snippets.

- The Result: Multi-hour outage resolved in 15 minutes. Agent delivered both immediate fix and long-term prevention strategies—eliminating manual troubleshooting overhead.
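The source does not reproduce the agent's code snippets, so the following is only a sketch of what the fix and the prevention step could look like. It builds the keyword arguments that boto3's S3 `put_object` and CloudFront `create_invalidation` calls accept, without calling AWS, so it runs with the standard library alone. The helper names `upload_args` and `invalidation_request` are hypothetical.

```python
import mimetypes
import time


def upload_args(bucket: str, key: str) -> dict:
    """Build upload arguments with an explicit Content-Type.

    Refuses to proceed when the type cannot be inferred from the key,
    so an object is never stored with missing or guessed-wrong metadata.
    """
    content_type, _ = mimetypes.guess_type(key)
    if content_type is None:
        raise ValueError(f"cannot infer Content-Type for {key!r}")
    return {"Bucket": bucket, "Key": key, "ContentType": content_type}


def invalidation_request(distribution_id: str, keys: list[str]) -> dict:
    """Build a CloudFront invalidation request for the given object keys."""
    paths = ["/" + key.lstrip("/") for key in keys]  # paths must start with "/"
    return {
        "DistributionId": distribution_id,
        "InvalidationBatch": {
            "Paths": {"Quantity": len(paths), "Items": paths},
            # Must be unique per request; a nanosecond timestamp is one option.
            "CallerReference": str(time.time_ns()),
        },
    }
```

With boto3 installed and credentials configured, these dictionaries could be passed as `s3.put_object(Body=data, **upload_args(...))` and `cloudfront.create_invalidation(**invalidation_request(...))`. The first addresses the root cause at upload time; the second clears responses that were cached before the metadata was corrected.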

The Quantitative Impact

The deployment yielded metrics that fundamentally altered Diaflow’s unit economics and operational posture:

| Operational Metric | Legacy Trajectory | CloudThinker Outcome |
|---|---|---|
| Global Expansion Timeline | 6 Months | 4 Weeks |
| Compliance Status | Planning Phase | Audit-Ready (Week 3) |
| Cloud Cost Efficiency | Unoptimized | 30-40% Reduction |
| Uptime SLA | 99.5% (Best Effort) | 99.9% (Architected) |
| Manual Ops Load | ~20 hours/dev/week | Reduced by 80% |
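For a sense of scale, the uptime and ops-load figures can be converted into absolute numbers. The sketch below assumes a 30-day month and treats the SLA as a simple availability percentage. Both function names are illustrative.

```python
def downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Maximum downtime, in minutes, permitted by an availability percentage."""
    total_minutes = days * 24 * 60
    return (100 - sla_percent) * total_minutes / 100


def remaining_hours(baseline_hours: float, reduction_percent: float) -> float:
    """Hours left after reducing a baseline by the given percentage."""
    return baseline_hours * (100 - reduction_percent) / 100
```

Under these assumptions, 99.5% availability permits about 216 minutes of downtime per month while 99.9% permits about 43 minutes, and an 80% reduction from roughly 20 hours leaves about 4 hours of manual ops work per developer per week.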

Strategic Analysis: The "Compound Interest" of Automation

The immediate value for Diaflow was speed, but the long-term strategic value is operational leverage.

By offloading the cognitive load of infrastructure management to AI agents, Diaflow decoupled their headcount from their scale. In a traditional model, as a SaaS platform grows, the DevOps team must scale linearly to manage complexity. With CloudThinker, the infrastructure optimizes itself.

This shift has redefined Diaflow's engineering culture. Conversations have moved from infrastructure constraints ("Can the database handle this load?") to product opportunities ("How fast can we ingest this new dataset?").

Conclusion

For Diaflow, the integration of CloudThinker was not merely a tool adoption—it was a strategic pivot. By treating infrastructure as an autonomous, intelligent layer rather than a manual dependency, they have secured the ability to scale indefinitely. They are no longer building infrastructure; they are building a product, while the infrastructure builds itself.